The Evolution of Design

Design has evolved significantly from graphic design and has branched into various fields. Today, UX/UI and product design play a crucial role in every company, focusing on creating better user experiences.

With the invention of touchscreen mobile devices, the digital world became widely accessible. Today, we see advancements in 2D/3D graphics, audio/video consumption, voice recognition, AR/VR, haptic feedback, sensors (such as iris and fingerprint scanners), and AI—all of which are shaping the future of design. AI can generate images, poems, screen designs, and ideas. In hardware, people are exploring innovations beyond just mobile and laptop devices.

The Future of Design: Innovation in Digital Space

Innovation in the digital space is evolving rapidly. As mentioned earlier in human psychology, our cognitive load (including memory, visual processing, and motor skills) influences how we interact with products. Vision is the dominant sense when it comes to designing for humans. For effective design, the visual cues should be compelling enough to invite touch and interaction.

Consider how we interact with mobile apps: first, we see the UI, read the text, and then engage using simple gestures like tapping, swiping, or pinching. These actions are efficient and require fewer mental resources compared to voice commands or complex gestures.

However, when we look at AR/VR, interacting in 3D space with gestures or voice commands can be much more demanding. For instance, imagine ordering 10 products in AR/VR—constantly waving your hands to rotate, zoom, or move the objects, all while dealing with potential eye strain from reading descriptions through AR/VR lenses. Additionally, switching devices, adjusting voice feedback, or reconnecting your devices creates friction in the experience.

Now, compare that to using a mobile device: browsing is easy, you can add products to your cart, and check out with a simple tap. There’s no need for gestures or voice commands. Even when distractions arise, you can effortlessly return to where you left off. The simplicity of just using fingers and a touch screen makes mobile browsing a far more efficient solution.

The Case for Simplicity in Innovation

So, which is easier? A mobile device with minimal mental resources required, or AR/VR that demands much more cognitive effort, along with additional devices? The simplicity of using just a touch screen and minimal gestures is undoubtedly more efficient. Mobile devices allow users to interact with content seamlessly, without additional physical and mental strain.

Innovation in design should focus on simple solutions—those that don’t complicate the user experience or overburden cognitive resources. Replacing existing tools for the sake of innovation isn’t always the answer. Users will always seek alternatives unless the new solution offers clear, intuitive advantages that fit into their daily routines.

Can AI Replace Human Creativity?

Finally, a pressing question for the future: Can AI replace human experiences like daydreaming, mind-wandering, creativity, or even our senses of smell and taste? While AI can generate ideas, solutions, and even new designs based on available data, it remains uncertain whether it can replicate the richness of human experience. For now, we can only wait and watch as AI continues to evolve.

Designing Intelligence: How UX Shapes the Evolution of AI Chatbots and Virtual Assistants

In today’s world of AI-powered conversations, UX isn't just about making something look good — it's about designing meaningful interactions between humans and machines. Whether you're chatting with a basic FAQ bot or engaging in a fluid conversation with ChatGPT, user experience design plays a critical role in shaping how helpful, intuitive, and trustworthy these systems feel.

Let’s break it down across the three major types of AI-driven assistants — and how UX contributes at every level:

1. Rule-Based Chatbots: Getting the Basics Right

- Learning Type: Supervised Learning

- Example: Old-school FAQ bots, bank chatbots, or customer service bots

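These bots work from predefined rules and question-answer pairs. Pure rule-based bots are not trained at all; variants that classify user intent are trained on manually labeled examples, which is where supervised learning comes in. Either way, they only understand what they have been explicitly told.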

Where UX Matters:

- Clear decision trees and user flows

- Context-aware responses (e.g., “Did you mean…?” fallback)

- Compact, goal-driven UI (buttons, quick replies, escalation points)
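The "Did you mean…?" fallback and escalation point listed above can be sketched in a few lines. This is a minimal illustration, not how any particular bot is built: the `FAQ` entries, the `reply` function, and the response shape are hypothetical, and fuzzy matching with Python's standard `difflib` is one assumed way to find near-miss questions.

```python
from difflib import get_close_matches

# Hypothetical question-answer pairs; a real bot would load a curated FAQ.
FAQ = {
    "what are your opening hours": "We are open 9am to 5pm, Monday to Friday.",
    "how do i reset my password": "Use the 'Forgot password' link on the sign-in page.",
    "how do i contact support": "You can reach support from the Help menu.",
}


def normalize(text: str) -> str:
    """Lowercase and drop punctuation so near-identical questions match."""
    kept = (ch for ch in text.lower() if ch.isalnum() or ch.isspace())
    return "".join(kept).strip()


def reply(user_text: str) -> dict:
    """Answer exactly, offer 'Did you mean…?' quick replies, or escalate."""
    question = normalize(user_text)
    if question in FAQ:
        return {"type": "answer", "text": FAQ[question]}
    # Fuzzy match against known questions (assumed similarity cutoff of 0.6).
    suggestions = get_close_matches(question, list(FAQ), n=2, cutoff=0.6)
    if suggestions:
        return {"type": "did_you_mean", "text": "Did you mean…?",
                "quick_replies": suggestions}
    # Escalation point: nothing matched, so hand over to a human.
    return {"type": "escalate",
            "text": "I can't help with that yet. Connecting you to an agent."}
```

The three response types map onto the UI elements in the list: a plain answer, quick-reply buttons for the fallback, and an escalation path when the bot runs out of rules.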

2. GPT-based Chatbots: The Conversational Leap

- Learning Type: Self-Supervised Learning

- Example: ChatGPT, Bard, Claude AI

These models don’t require labeled training data. Instead, they learn by predicting the next word in vast amounts of text — enabling human-like, flexible conversations.

Where UX Matters:

- Prompt design: guiding users to ask the right type of question

- Personality and tone: crafting the bot’s “voice” for brand consistency

- Conversation memory cues, visual history of past interactions

- Setting expectations about hallucinations or misleading answers with clear microcopy (e.g., a note that answers may be inaccurate)

3. Smart Virtual Assistants: Learning from Life

- Learning Type: Unsupervised Learning

- Example: Advanced Alexa, future Google Assistant, personalized Siri

These assistants learn without labels. They observe, analyze, and adapt to user behaviors, routines, preferences — all on their own.

Where UX Matters:

- Privacy transparency: show users what’s being learned and how

- Predictive UX: offer actions before users ask (e.g., “Leaving for office?”)

- Seamless multi-modal interaction (voice + screen + touch)

- Trust-building through explainability and subtle feedback

Final Thoughts

Whether it’s a rigid rules-based bot or a hyper-intelligent assistant that learns your daily routine, UX is the bridge between AI logic and human emotion.

AI learns patterns. But UX ensures it understands people.

That's where the real magic happens.

How AI is Shaping the Future of UX Design

Artificial Intelligence (AI) is no longer a buzzword — it's transforming how we design, test, and deliver user experiences. As technology evolves, the role of a UX designer is shifting from just designing screens to designing intelligent systems that learn, adapt, and respond.

From automating user research to generating UI layouts, AI is unlocking new possibilities in how we understand user behavior and deliver value faster. But what does this really mean for the future of UX design?

Designing with Data, Not Just Assumptions

Traditional UX relied heavily on interviews, surveys, and usability testing. While these are still important, AI tools can now analyze user behavior at scale and in real time. Tools powered by machine learning can detect patterns, predict drop-offs, and recommend design changes based on real usage data.

This means designers can make informed decisions faster, and iterate based on insights, not just intuition.

AI-Driven Personalization is Raising the Bar

Modern users expect digital products to be smart and relevant. AI enables hyper-personalized experiences — recommending content, changing UI layouts, or even adjusting workflows based on user history or preferences.

For UX designers, this introduces a new design layer: not just static screens, but dynamic, adaptive systems that change per user.

From Wireframes to AI-Assisted Prototypes

AI is speeding up the design process itself. Tools like Uizard, Visily, or Figma plugins can now turn text prompts into wireframes or generate design alternatives in seconds. Designers are starting to work alongside AI — co-creating layouts, components, and even UX copy.

While AI helps with speed and scale, it’s the human designer who ensures clarity, empathy, and usability remain intact.

Ethical Design and the Human Touch

As AI becomes more embedded in UX, ethical concerns around transparency, bias, and data privacy grow. Designers now have the responsibility to make AI-driven interactions feel safe and trustworthy.

UX isn’t just about making things work — it’s about making them right. Designing for explainability and consent is part of the future UX toolkit.

The Role of UX is Evolving, Not Disappearing

AI will not replace UX designers — but it will change what they do. The future of UX is about designing systems that learn, not just layouts that look good.

UX designers will become orchestrators of intelligent experiences, blending creativity with data, emotion with logic.

Final Thoughts

The UX of tomorrow will be driven by intelligent systems, but grounded in human needs. As AI continues to evolve, designers who understand both technology and empathy will shape the most meaningful digital experiences.

The tools may change, but the goal remains the same: create experiences that serve users, now faster, smarter, and more personal than ever before.

UI Patterns in Enterprise UX Design

Designing for enterprise applications is vastly different from consumer-facing products. These systems often support complex workflows, large data sets, and multiple user roles. That’s where UI patterns play a crucial role — they bring consistency, efficiency, and scalability to UX in enterprise environments.

Why UI Patterns Matter in Enterprise Apps

Enterprise tools are used daily by professionals to complete mission-critical tasks. Unlike trendy consumer apps, they must prioritize function, clarity, and reliability. UI patterns help teams:

- Maintain consistency across complex modules

- Reduce cognitive load with familiar layouts and interactions

- Improve development speed by using reusable components

- Support training and onboarding through predictable behaviors

Common UI Patterns in Enterprise UX

1. Dashboards

Dashboards present high-level overviews with actionable insights. Good dashboards use cards, tiles, or widget layouts to surface key metrics or recent activities without overwhelming the user.

2. Data Tables

Tables are the backbone of many enterprise tools — for inventory, transactions, records, etc. Key enhancements include column sorting, inline editing, batch actions, and filtering.

3. Modal and Slide-Over Panels

These allow users to perform quick actions (like editing or creating records) without leaving the current page. Slide-over panels are especially helpful in preserving context.

4. Multi-Step Forms (Wizards)

Used for onboarding, setup, or long processes, multi-step forms break down complex tasks into digestible parts. A clear progress indicator and saved states improve usability.

5. Filter & Search Panels

Enterprise users often work with thousands of records. A well-placed filter sidebar or dynamic search bar makes data retrieval quick and intuitive.

6. Tabbed Navigation

Tabs help manage related content in limited space, especially in detail views like user profiles, project dashboards, or record editing.
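The multi-step form pattern above mentions a clear progress indicator and saved states. A minimal sketch of that state handling follows; the `Wizard` class, its method names, and the step names in the usage note are hypothetical, and a real form would also validate each step.

```python
from dataclasses import dataclass, field


@dataclass
class Wizard:
    """Tracks the current step and keeps answers so a user can leave and resume."""
    steps: list
    current: int = 0
    answers: dict = field(default_factory=dict)

    def progress(self) -> str:
        """Text for a progress indicator, e.g. 'Step 2 of 3: Team'."""
        return f"Step {self.current + 1} of {len(self.steps)}: {self.steps[self.current]}"

    def submit(self, data: dict) -> None:
        """Save the current step's answers, then advance unless on the last step."""
        self.answers[self.steps[self.current]] = data
        if self.current < len(self.steps) - 1:
            self.current += 1

    def back(self) -> None:
        """Go back one step without discarding saved answers."""
        self.current = max(0, self.current - 1)

    def save_state(self) -> dict:
        """Snapshot that could be persisted between sessions."""
        return {"current": self.current, "answers": dict(self.answers)}

    @classmethod
    def resume(cls, steps: list, state: dict) -> "Wizard":
        """Rebuild the wizard from a previously saved snapshot."""
        return cls(steps=steps, current=state["current"],
                   answers=dict(state["answers"]))
```

For example, `Wizard(["Account", "Team", "Billing"])` starts at "Step 1 of 3: Account". After one `submit`, saving the state and calling `Wizard.resume` with it returns the user to step 2 with the earlier answers intact.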

Designing with Reusability and Scalability in Mind

In enterprise UX, patterns must be designed for scale. Components should support:

- Accessibility across devices and screen sizes

- Customizability for different business roles or permissions

- Performance optimization for large datasets

- Consistency across internal tools and platforms

UX Designer’s Role in Pattern Libraries

UX designers in enterprise environments often work closely with design systems and pattern libraries. Their role includes:

- Defining usage guidelines for each component

- Testing patterns across multiple contexts and flows

- Collaborating with developers to ensure fidelity and performance

- Documenting interaction logic, states, and accessibility behaviors

Final Thoughts

UI patterns in enterprise applications aren’t just design choices — they are strategic building blocks. When used effectively, they reduce complexity, support scalability, and empower users to focus on tasks, not tools.

For UX designers, mastering pattern-based design is key to delivering intuitive, high-performing enterprise systems that work across teams, tools, and time.

AI in UX Research: Speeding Up User Insights Without Losing Empathy

UX research has always been about understanding people — their needs, pain points, and behaviors. But with the rise of AI tools, the way we conduct research is rapidly changing. The good news? We can go faster, analyze deeper, and still stay human-centric.

The Traditional Research Bottleneck

Interviews, usability tests, surveys — they’re effective, but time-consuming. Transcribing audio, analyzing patterns, tagging observations — these manual tasks slow down delivery and delay decisions.

In fast-paced product cycles, this becomes a bottleneck. Teams need insights fast, but speed often means skipping important discovery work. That’s where AI steps in.

Where AI Accelerates UX Research

- Auto-transcription: Tools like Otter.ai or Notably transcribe interviews in real time, saving hours of manual effort.

- Sentiment analysis: AI can detect tone, emotion, and attitude in user responses to uncover hidden patterns.

- Clustering themes: Machine learning algorithms can group user feedback into topic clusters — helping identify common insights faster.

- Survey summarization: AI tools can summarize open-ended survey data into digestible summaries without losing context.
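To make the theme-clustering idea concrete, here is a deliberately simple stand-in. It is not a machine learning model and not how the tools named above work: it greedily groups comments by keyword overlap (Jaccard similarity). The stopword list, the threshold, and the function names are all assumptions chosen for illustration.

```python
# Assumed, tiny stopword list; real pipelines use far larger ones.
STOPWORDS = {"the", "a", "an", "is", "to", "i", "it", "and", "of", "was", "in", "my", "for"}


def keywords(comment: str) -> set:
    """Lowercased words with basic punctuation and stopwords removed."""
    words = (w.strip(".,!?").lower() for w in comment.split())
    return {w for w in words if w and w not in STOPWORDS}


def jaccard(a: set, b: set) -> float:
    """Share of keywords two sets have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cluster_feedback(comments: list, threshold: float = 0.2) -> list:
    """Greedily assign each comment to the most similar existing cluster."""
    clusters = []  # each cluster: {"keywords": set, "comments": list}
    for comment in comments:
        kw = keywords(comment)
        best = max(clusters, key=lambda c: jaccard(kw, c["keywords"]), default=None)
        if best is not None and jaccard(kw, best["keywords"]) >= threshold:
            best["comments"].append(comment)
            best["keywords"] |= kw
        else:
            clusters.append({"keywords": set(kw), "comments": [comment]})
    return [c["comments"] for c in clusters]
```

Given two comments about a slow checkout and two about hard-to-find search filters, this returns two groups. The researcher still has to name each theme and judge whether the grouping makes sense.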

Does Faster Mean Colder? Not Necessarily.

The fear with AI in UX is that automation might strip away empathy — turning humans into data points. But when used right, AI doesn’t replace human understanding — it augments it.

Designers still need to ask the right questions, interpret context, and understand emotions. AI just handles the repetitive grunt work so that humans can focus on connection.

Empathy + Efficiency = Better Design Decisions

When you combine AI speed with human empathy, you get powerful outcomes:

- Faster insights without skipping discovery

- More time to focus on patterns, not paperwork

- Informed decisions backed by both data and emotion

This hybrid approach brings balance: AI does the heavy lifting, while UX researchers bring the heart.

Recommended AI Tools for UX Researchers

- Dovetail: Tag, highlight, and analyze user feedback with AI support.

- Notably: Organize research data, transcripts, and themes with smart insights.

- Maze + AI: Automate usability test result summaries.

- Looppanel: Real-time AI summaries of interviews and usability tests.

Final Thoughts

AI won’t replace the UX researcher. But it will change how we work. The future of UX research is not just faster — it’s more scalable, inclusive, and focused on what really matters: the user.

Speed doesn’t kill empathy — it frees it. By letting AI handle the boring bits, we can spend more time connecting, listening, and designing with purpose.

AI-Generated Design Systems: Can Machines Understand Brand DNA?

Design systems bring consistency, speed, and scalability to digital products. But as AI tools begin to generate entire UI kits, components, and visual styles — a question arises: Can machines truly understand a brand’s personality, voice, and values? In short — can AI reflect a brand's DNA?

What Are AI-Generated Design Systems?

AI-powered tools can now auto-generate design tokens, suggest component libraries, and even draft UI patterns based on a few prompts. Given a moodboard or a few keywords, some tools can create brand-style guides in minutes.

Tools like Uizard, Galileo AI, and Penpot with AI assist aim to speed up the creation of design systems — often replacing weeks of manual effort.

The Benefits: Speed, Scale, Consistency

- Rapid prototyping: AI can generate UI mockups that follow basic design principles quickly.

- Design system scaffolding: Color palettes, typography rules, button styles, and layout grids can be generated instantly.

- Consistency enforcement: AI models can flag visual or interaction inconsistencies across screens or platforms.
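The token scaffolding and consistency enforcement described above can be illustrated with a plain rule-based check. This is not an AI model: the `TOKENS` values, the screen data shape, and `find_inconsistencies` are hypothetical, and the check only compares colors and font sizes against the token set.

```python
# Hypothetical design tokens; values are illustrative only.
TOKENS = {
    "color": {"primary": "#0B5FFF", "surface": "#FFFFFF", "text": "#1A1A1A"},
    "font_size": {"body": 16, "heading": 24},
}


def find_inconsistencies(screens: dict, tokens: dict) -> list:
    """Return (screen, element, property, value) for every off-token style."""
    allowed_colors = {c.upper() for c in tokens["color"].values()}
    allowed_sizes = set(tokens["font_size"].values())
    issues = []
    for screen, styles in screens.items():
        for style in styles:
            # Compare colors case-insensitively so '#0b5fff' matches '#0B5FFF'.
            if style["color"].upper() not in allowed_colors:
                issues.append((screen, style["element"], "color", style["color"]))
            if style["font_size"] not in allowed_sizes:
                issues.append((screen, style["element"], "font_size", style["font_size"]))
    return issues
```

A title drawn in `#0C60FF` at 22px would be flagged twice, once for each property. Deciding whether that deviation is a mistake or a deliberate brand choice remains a human call.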

The Challenge: Interpreting Brand Identity

While AI can follow rules and patterns, brands are not just pixels and palettes — they are emotional, cultural, and strategic. A brand’s DNA includes tone, voice, values, and legacy — things that are difficult for machines to fully grasp.

An algorithm might know that a fintech app should look “trustworthy,” but does it know how that trust is culturally expressed in design across markets or over time?

Design Systems Require More Than UI

A real design system is more than components — it’s about:

- Design principles and intent

- Voice and tone guidelines

- Accessibility and inclusion patterns

- Emotional resonance and user trust

These are deeply human aspects. AI can generate visuals, but **brand soul still needs human designers**.

Can AI and Designers Collaborate?

Yes — and that’s where the opportunity lies. Designers can:

- Use AI to generate rough drafts or starter kits

- Review and refine outputs to match brand identity

- Train models on past design systems to improve alignment

- Free up time to focus on narrative, storytelling, and values

Think of AI as a junior design assistant — fast, helpful, but in need of creative direction.

Final Thoughts

AI is changing how we build design systems — bringing speed and automation to a process that was once slow and manual. But until machines can feel, reflect, and express emotion — **brand identity remains a human domain**.

Designers who blend the speed of AI with the soul of design will define the future of meaningful digital branding.

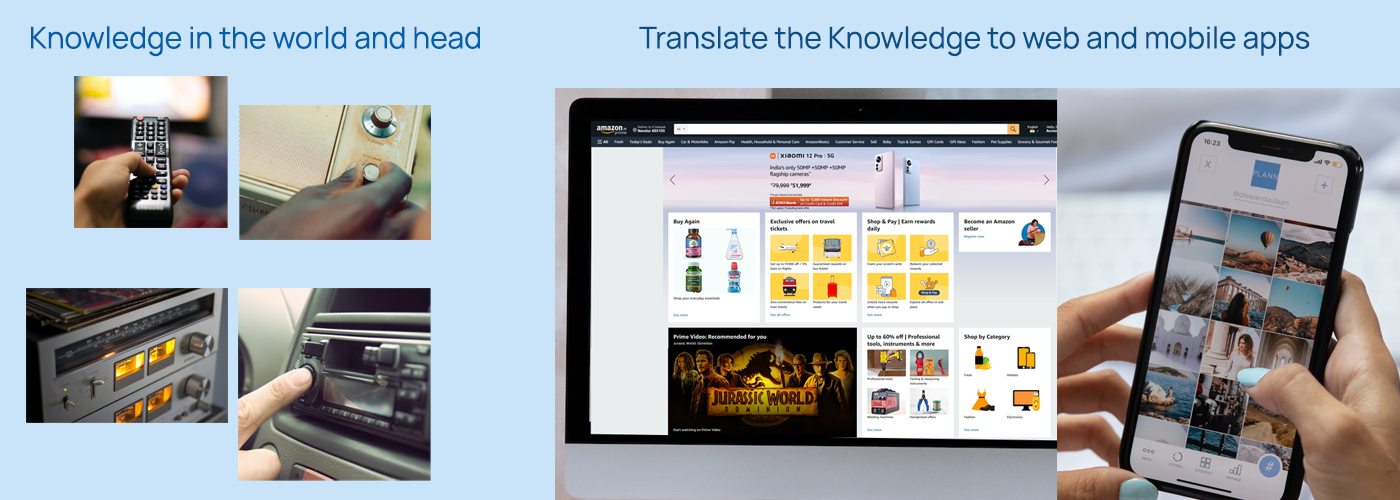

Interaction Design: Knowledge in the world and head

Knowledge in the world is how we perceive and interact with the objects around us. The picture below, on the left, shows a chair, table, kettle, door handle, and water jug (pitcher). Each has perceived affordances (what actions are possible) and signifiers (where the action takes place) that help us interact with it. This works at a subconscious level, called visceral processing, in the human brain (human cognition and emotion).

Knowledge in the head is memory. The skills we learn and practice are stored in our memory. This too works at a subconscious level, called behavioral processing. Once we have practiced a skill for enough hours, it is stored in memory and we perform the task effortlessly.

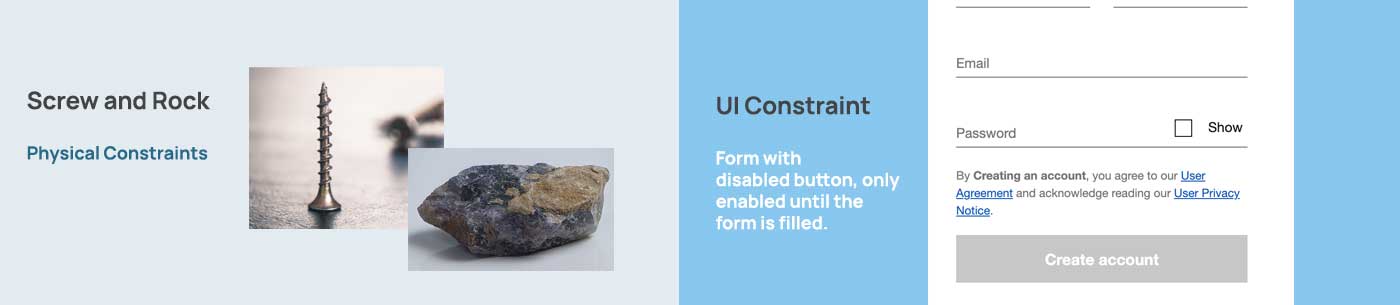

We need both knowledge in the world and knowledge in the head; together they determine our behavior. When the knowledge needed to perform a task is readily available in the world, the effort to learn is reduced. Consider a screw: it has projections, so you can remove or replace it effortlessly. This knowledge comes easily because we encounter screws in many objects, such as chairs, shelves, tables, drawers, and even toys. We already know how a screw works, so there is little to learn when removing or replacing one. Now consider playing a musical instrument: the effort needed to learn it is high. But once we learn and practice enough, the skill is stored in our memory and playing becomes easy.

Applying the above principles to apps and web applications

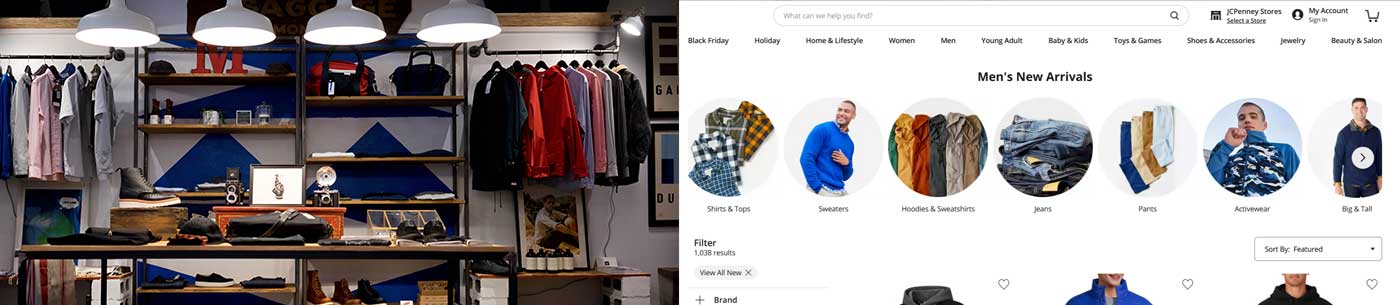

Good design is about discoverability, understanding, and a good conceptual model (how something is designed to work). Users spend a lot of time with the objects around them and carry a mental model (how they believe something will work) in their minds. The expectations users have while interacting with a product are based on the knowledge they already have. It's important that we design the conceptual model with this in mind.

People use a product with expectations about how it should work.

Based on past experience and their mental model, people expect a product to work in a certain way when they interact with it. Discoverability: guided by affordances and signifiers such as buttons, labels, pictures, and links, people start interacting with click or swipe gestures to complete the task. Understanding: What do the settings mean? How do I use them? What does each function mean? How do I accomplish the task?

When expectations are not met, users get frustrated and are unlikely to return to the product. They go elsewhere, to other websites or apps, to get the task done. Users who have to apply conscious effort (reflective processing) to complete a task spend a lot of mental resources comparing what happened with previous experience or reasoning about the outcome, which makes them uncomfortable. Knowledge in the world and in the head creates a behavior pattern in users. When a user faces a task that can be done without much mental effort (subconsciously), the task is easy to accomplish.

Knowledge of and Knowledge how

Knowledge of is declarative knowledge (rules and facts), such as ‘The capital city is Delhi’, ‘There are seven days in a week’, ‘The Earth revolves around the Sun’, and ‘We cross the road at a zebra crossing’. People use this knowledge to judge what is true and what is not. Rules, however, are not followed everywhere in the world.

Knowledge how is procedural knowledge: playing a musical instrument, catching a ball thrown at you, or answering questions with ease when your skills (in mathematics or science, say) are tested. Procedural knowledge is stored at the subconscious level.

Knowledge in the world consists of signifiers, affordances, and the actions that physical constraints allow. Knowledge in the head consists of the conceptual model, logical constraints, and similarities between the current situation and previous experience. We need both; design is ineffective when either is left out.

Design Psychology: Using Psychology while designing UX for your products.

Time spent learning the psychology of your users is time well spent when designing your user experience. As the digital world moves ever faster, understanding user behavior becomes more important.

The human brain contains roughly 80-100 billion neurons, some of them waiting to be wired by new experiences that are stored as new memories. Every day from the day we are born, our brain senses new smells, sights, touches, sounds, and tastes. The digital world has taken over our lives: we are surrounded by gadgets and constantly consume digital data. So how do we design a product, with the help of psychology, that stands out among crowded apps and websites? Using psychology in user experience design can bridge the gap between your user and your product.

Memory – Repetitive action makes memory stick.

Memorizing things consumes a lot of our mental resources. A typical user can hold only about four items in mind at a time. Repetitive action makes memory stick. So, placing the home button in the same place throughout your website/app makes life easier for the user. Consistent wording on buttons also lets users predict what a button will do before they click it.

Thinking – Mental model and Conceptual model

When users are given a new product, they start interacting with it based on their mental model. We designers build the conceptual model on assumptions, and use task analysis, personas, and user journeys to validate it against the users' mental model while designing the interface. Understanding both models makes the designer's job easier. Ex: Designing an online form (conceptual model) with bite-sized chunks and related items grouped as in the paper form (mental model) makes life easier for the user.

Read – Capital Letters, Small Letters and Length of Text.

Capital and small letters form a pattern in the user’s mind. It’s a myth that people can’t read capital letters easily. Both capital and small letters are easy to read when the line length is kept between 72 and 100 characters. Aren’t we used to reading newspapers? Varying the font size also creates a hierarchy for users.

Seeing – Peripheral Vision and Central Vision.

We notice more objects with peripheral vision than with central vision. When we walk in traffic, we spot obstacles in our way using peripheral vision. Likewise, while browsing a website/app we pick up many visual cues with peripheral vision. Visual cues can be colours, buttons, links, and images. Designing visual cues that draw the user's attention is therefore important for inviting interaction.

Attention – Danger, Food, Sex, Movement, Faces and Stories.

Dopamine, a chemical in our brain, draws our attention to danger, food, sex, movement, faces and stories. Error messages in red, images of food, images of celebrities, and on-screen animation all capture the user's attention. Ex: Notifications popping up at the bottom right of your monitor screen (movement).

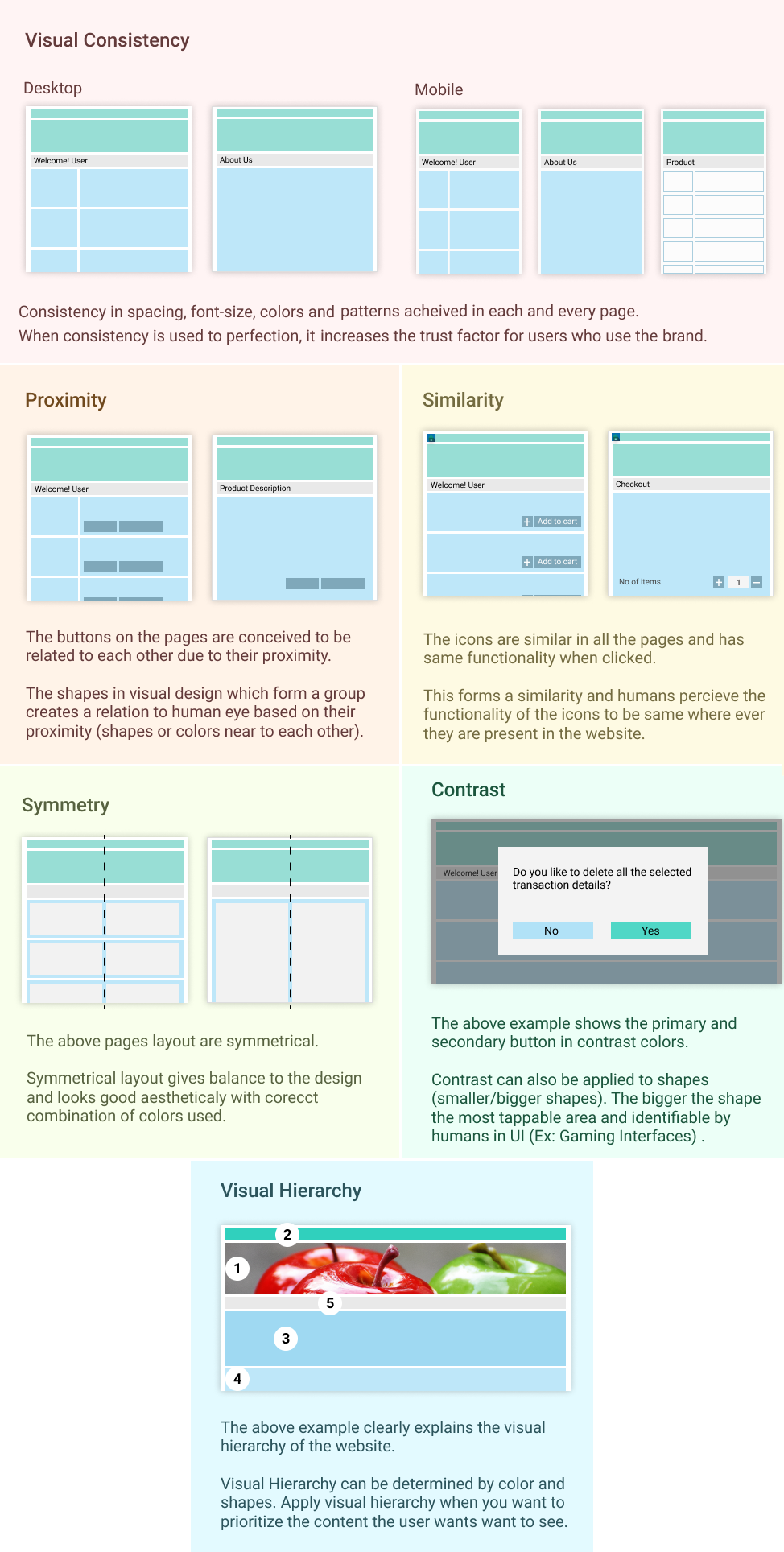

Visual Design Principles

Visual consistency, proximity, similarity, contrast, and visual hierarchy are some of the principles that can be used to strengthen visual identity. Take these principles into consideration when designing any design system.

UX: Fundamental principles of design

As designers, we need to understand, from the user's perspective, the questions a user asks when interacting with any product: What do I want to accomplish? What are the alternative action sequences? What action can I do now? How do I do it? What happened? What does it mean? Have I accomplished my goal? The design principles below, when applied while designing a product, answer these questions.

Discoverability

When users see a mobile app or website, can they determine what actions are possible and understand the current state of the app?

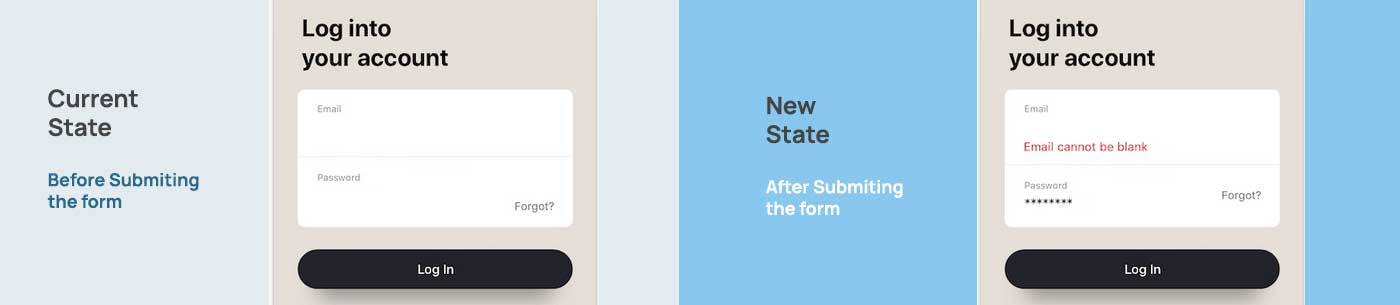

Feedback

Feedback should inform the user about the current state of the app and the new state after an action has been performed.

Conceptual Model

All the information needed to create a good conceptual model should be provided when designing an app, leading to discoverability, understanding, a feeling of control, and the ability to evaluate results against expectations.

Affordances and Signifiers

Affordances: What affordances exist to make the desired actions possible within the app? For example, a button in the app. Signifiers: These ensure discoverability of what to do and where to do it. For example, the label on the button.

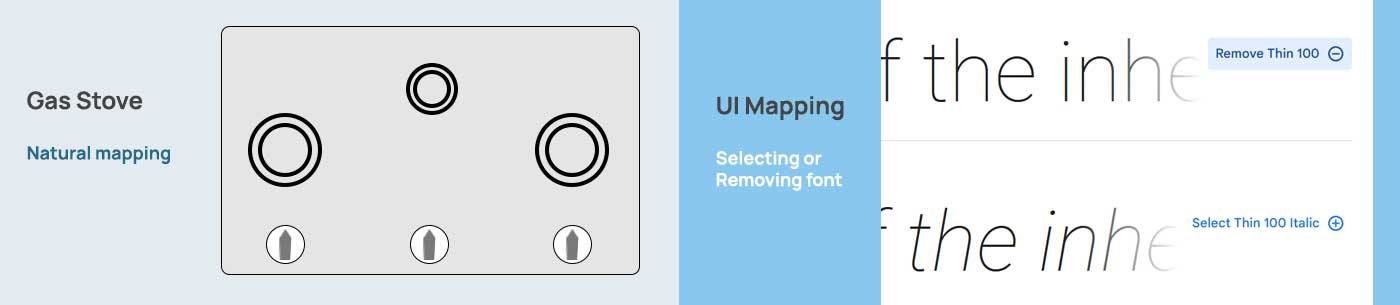

Mappings

The relationship between controls and their actions should follow the principles of good mapping, supported by a clear layout and by placing UI elements near the actions they control.

Constraints

Constraints can be physical (the projections of a screw, a rock, or a chair), logical (a specific action is needed in a specific context), semantic (arranged in order), or cultural (manners observed in a lift, such as being quiet rather than shouting). When designing apps, we should provide constraints that guide actions and ease interpretation.

UX: Context is everything

When designers are asked how they solve a problem while designing a user experience, the answer to most questions is "It depends on the context". For a designer, understanding context when creating the user experience of a product is an essential skill. Whether doing user research, creating IA, or creating wireframes, context plays an important role.

Contextual Inquiry in User Research

Contextual inquiry means observing how users perform a task in their natural environment: What behavior patterns do they follow? Do they use physical items such as pencil and paper to take notes? What devices do they use? Do they sit or stand? Are they in an office, in daylight, or in a busy environment? Do they walk around with a mobile or sit in front of a computer? All these observations are part of contextual inquiry. "Designing for context" helps us create better products.

Context while creating Information Architecture

While creating IA, we need to understand how users navigate through a product. IA should help the user achieve the goal or task by providing the correct information in the specific context. Adding or removing content, and checking that the content makes sense in the current context of the task, helps users perform their task with ease. While designing IA we need to understand context from the user's perspective, asking questions like:

- Where am I?

- How did I get here?

- Where do I go from here?

- What can I do here?

Context while creating UI Design

While creating UI, we need to place the UI elements on a page according to the context of the task. We can enable or disable UI elements based on context while the user is performing the task (progressive disclosure: show or hide content or UI only for that specific context). When we design for context, we can understand which UI elements or UI patterns are needed for a specific page.
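Progressive disclosure, as described above, comes down to deriving what is shown and what is enabled from the current context. A minimal sketch, assuming a hypothetical sign-up form in which extra fields appear only for business accounts (the field names and both functions are illustrative):

```python
def visible_fields(context: dict) -> list:
    """Fields to show for the current context; extras appear only when relevant."""
    fields = ["name", "email", "account_type"]
    if context.get("account_type") == "business":
        # Disclose business-only fields once the user picks a business account.
        fields += ["company_name", "tax_id"]
    return fields


def submit_enabled(context: dict) -> bool:
    """Enable the submit button only when every visible field is filled in."""
    return all(context.get(name) for name in visible_fields(context))
```

With a personal account, only three fields are shown and the button is enabled once they are filled. Switching to a business account reveals two more fields and disables the button until those are completed.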